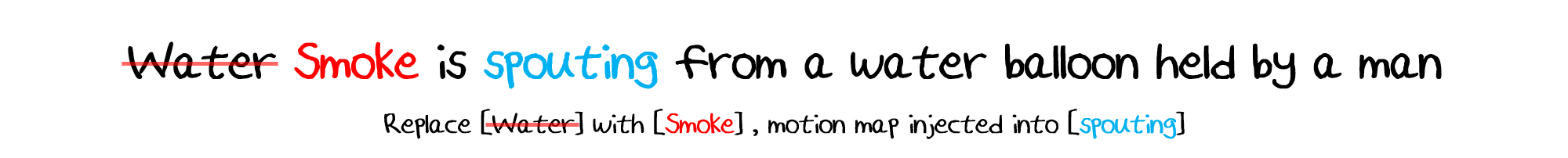

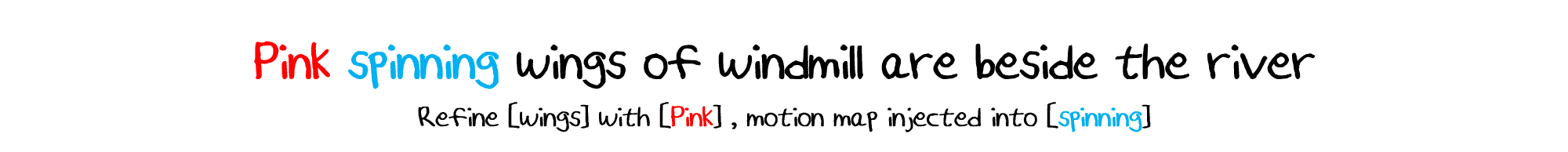

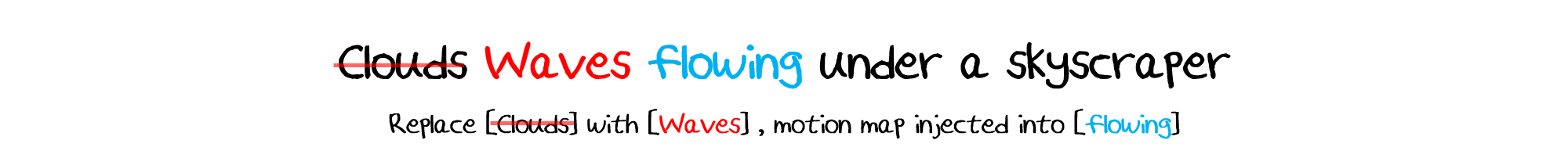

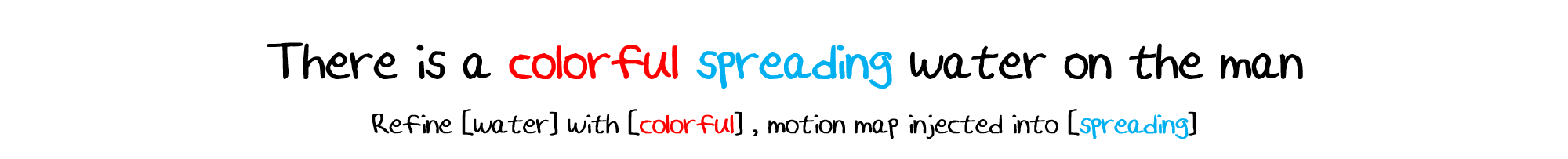

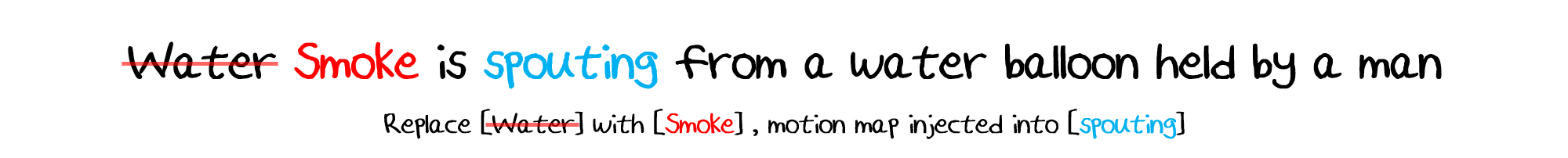

Enhancing Text-to-Video Editing with Motion Map Injection

Seong-Hun Jeong*, In-Hwan Jin*, Haesoo Choo*, Hyeonjun Na*, and Kyeongbo Kong

Pukyong National University, Pusan National University

Creative Video Editing and Understanding (CVEU), 2023 Oral Presentation

*Indicates Equal Contribution